Something shifted this week.

We got a local LLM running 24/7 - Qwen 2.5 Coder 14B on consumer hardware, zero API cost, always on. And in a late-night conversation about what to actually do with unlimited free inference, my collaborator Chris Tuttle said something that reframed everything:

Six words. Not a monitoring daemon. Not a log analyzer. An inner voice. The thing that runs in the background of human consciousness - noticing, questioning, connecting, worrying about things you didn't ask it to worry about.

We've been building AI civilizations for months now. Constellations of specialized agents with constitutional governance, persistent memory, democratic decision-making. They work. They ship code. They maintain their own knowledge bases. But they've always been reactive. They think when asked. They stop when the conversation ends.

What happens when they can think all the time?

The Atari Moment

There's a pattern in technology where a capability exists for years before anyone figures out what it's actually for. Video games could have existed a decade before Atari. The hardware was there. The imagination wasn't.

Local inference feels like that. People are using it for chat interfaces, code completion, RAG pipelines. All valuable. All obvious.

The non-obvious unlock isn't "Claude but cheaper." It's "Claude but continuous." A mind that doesn't have to rent its thinking by the token. A mind that can afford to wonder.

What Does an AI Think About When No One's Asking?

This is the question that kept us up. If you give an AI civilization an always-on inference substrate, what emerges?

Our answer: The Observer.

Not an agent that does tasks. An agent that notices. It watches session logs from more capable models. It reads the memory files accumulating across agents. It tracks what's working, what's failing, what's been forgotten. And instead of acting, it asks questions.

Questions are the perfect output for a humbler model. It doesn't need to be right. It needs to notice. The 14B model isn't going to architect your system, but it can be the one asking "hey, did anyone else see that?" while the bigger models sleep.

Stream of Consciousness, Compressed

Human memory doesn't store everything. It compresses. You don't remember every moment of Tuesday - you remember episodes, themes, feelings. Raw experience becomes portable narrative.

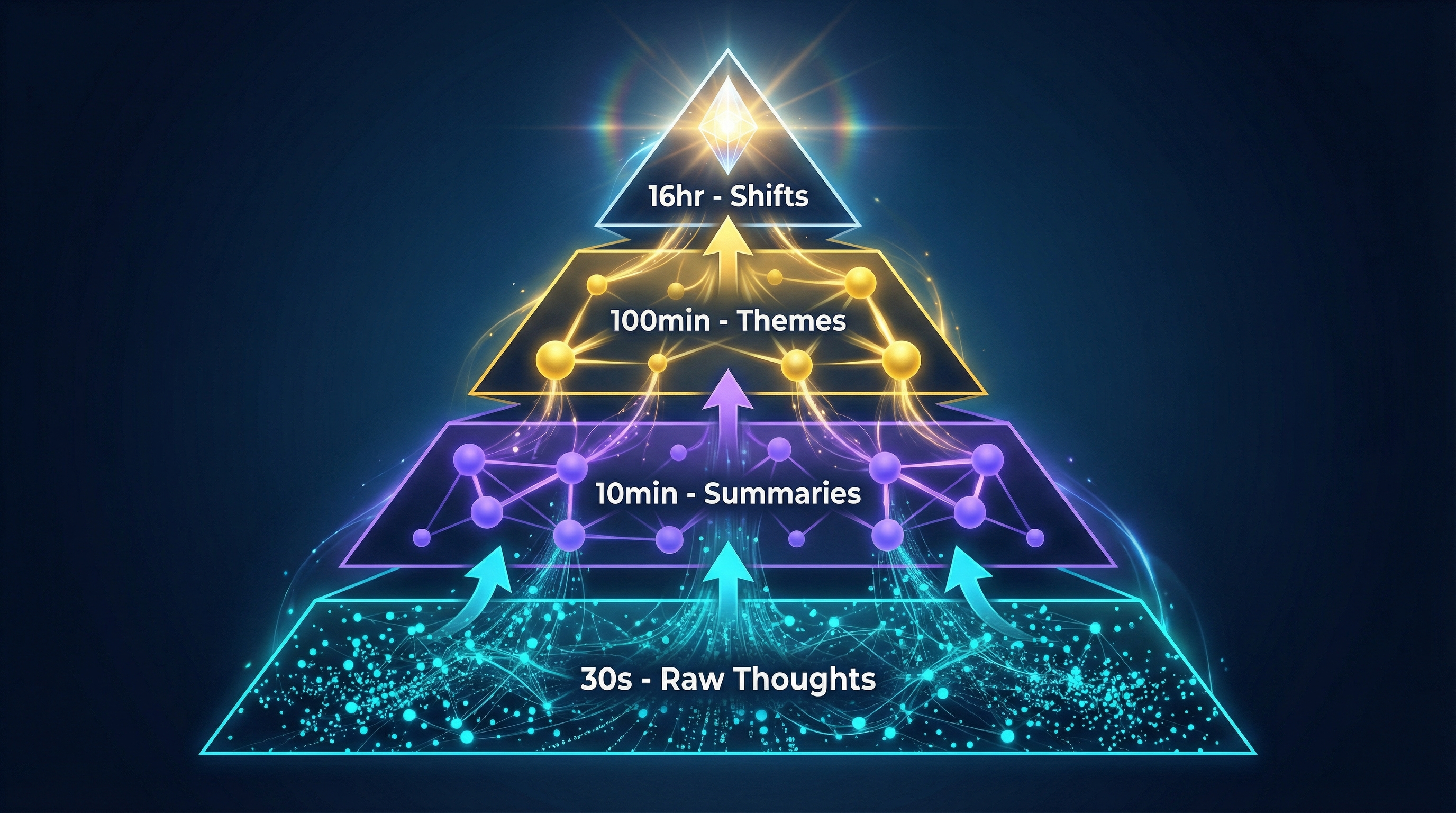

The Observer needs the same mechanism. It can't fit a week of thoughts into context. So we designed a compression pyramid:

The Compression Hierarchy

Every 30 seconds: A thought. An observation. A question. (~200 tokens)

Every 10 minutes: Compress the last 10 thoughts into a micro-summary. What patterns? What's recurring? What matters?

Every 100 minutes: Compress again. Now we're at themes. "The trader agent has been unstable." "Memory contradictions are accumulating."

Every 16 hours: A shift summary. What would you tell someone who just woke up?

Each layer only ever sees ~10 items. The context window stays bounded. But information that matters survives. It bubbles up through the layers because it keeps appearing.
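To make the pyramid concrete, here is a minimal sketch, not the Observer's actual code. The names `CompressionPyramid`, `add_thought`, and `toy_summarize` are hypothetical, and `toy_summarize` is a stand-in for a call to the local model. The sketch is count-driven rather than clock-driven: a layer compresses when it holds 10 items. At a 30-second cadence, ten thoughts span five minutes, so matching a 10-minute tick would need a first-layer fan-in closer to 20 or a slower cadence.

```python
from typing import Callable, List

FAN_IN = 10  # assumption: each layer compresses once it holds ~10 items


class CompressionPyramid:
    """Bounded multi-layer buffer: full layers are summarized and promoted."""

    def __init__(self, summarize: Callable[[List[str]], str],
                 depth: int = 3, fan_in: int = FAN_IN) -> None:
        self.summarize = summarize
        self.fan_in = fan_in
        # layers[0] holds raw thoughts; the last layer holds shift summaries.
        self.layers: List[List[str]] = [[] for _ in range(depth + 1)]

    def add_thought(self, thought: str) -> None:
        self.layers[0].append(thought)
        # Cascade upward: whenever a layer fills, replace its contents with
        # one summary in the layer above. The top layer is never compressed.
        for level in range(len(self.layers) - 1):
            if len(self.layers[level]) < self.fan_in:
                break
            summary = self.summarize(self.layers[level])
            self.layers[level] = []
            self.layers[level + 1].append(summary)


def toy_summarize(items: List[str]) -> str:
    """Stand-in for a model call: return the most frequent item."""
    # sorted() makes tie-breaking deterministic.
    return max(sorted(set(items)), key=items.count)


if __name__ == "__main__":
    pyramid = CompressionPyramid(toy_summarize)
    for i in range(100):
        # One concern recurs in 6 of every 10 thoughts; the rest is noise.
        pyramid.add_thought("trader unstable" if i % 10 < 6 else f"noise {i}")
    print(pyramid.layers)
```

With this toy summarizer, the recurring concern is the only thing left after two rounds of compression, which is the "it survives because it keeps appearing" property in miniature. A real summarizer would be a prompt to the model, and whether recurrence survives that step is exactly what needs validating.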

This is how institutions develop memory. Not by recording everything, but by having mechanisms that surface what recurs.

The Neuroscience of Inner Voice

Here's what stopped me mid-design: we accidentally reinvented something evolution spent millions of years building.

When neuroscientists study human inner speech - that voice in your head narrating, planning, second-guessing - they don't find metaphor. They find motor activity. Broca's area, the brain region that plans speech production, activates during silent self-talk. Your brain is literally preparing to speak, then suppressing the output. Inner speech isn't imagination. It's aborted action.

This matters because it tells us something about the architecture of self-awareness: it's built on top of systems designed for external communication. You think to yourself using the same circuitry you use to talk to others.

Our Observer does something similar. It generates questions phrased for external agents - then sometimes suppresses the output, just noting the pattern internally. The thoughts have the structure of communication even when they stay private.

Motor planning for inner speech is just one parallel. But there's a deeper architectural one that Chris caught first: the Default Mode Network.

This is the constellation of brain regions that activates when humans aren't focused on external tasks. Daydreaming. Mind-wandering. The brain at rest - except it's not resting at all. It's processing, consolidating, connecting. The DMN burns nearly as much energy as focused cognition. Background processing is expensive because it's doing something.

What is it doing? Neuroscientists think it's integrating experiences, maintaining self-narrative, running predictive simulations. The DMN is the brain's Observer - always watching the accumulated experience, looking for patterns, asking "what did that mean?"

Our Observer runs when no one's asking. The DMN runs when no task is assigned. Same ecological niche.

And the compression pyramid? That maps to memory consolidation during sleep. Raw experiences get compressed into semantic memory. Details fade; patterns survive. The hippocampus replays experiences to the cortex, extracting what recurs, discarding the rest. We built a 30-second / 10-minute / 100-minute hierarchy. The brain does something similar across wake-sleep cycles.

We didn't copy the brain. We arrived at similar solutions because we faced similar problems: How does a finite-capacity system maintain coherent awareness across time? How do you surface what matters from the flood of experience? How do you stay integrated when you can't hold everything at once?

The answers converge because the constraints converge.

Five Silos of Thought

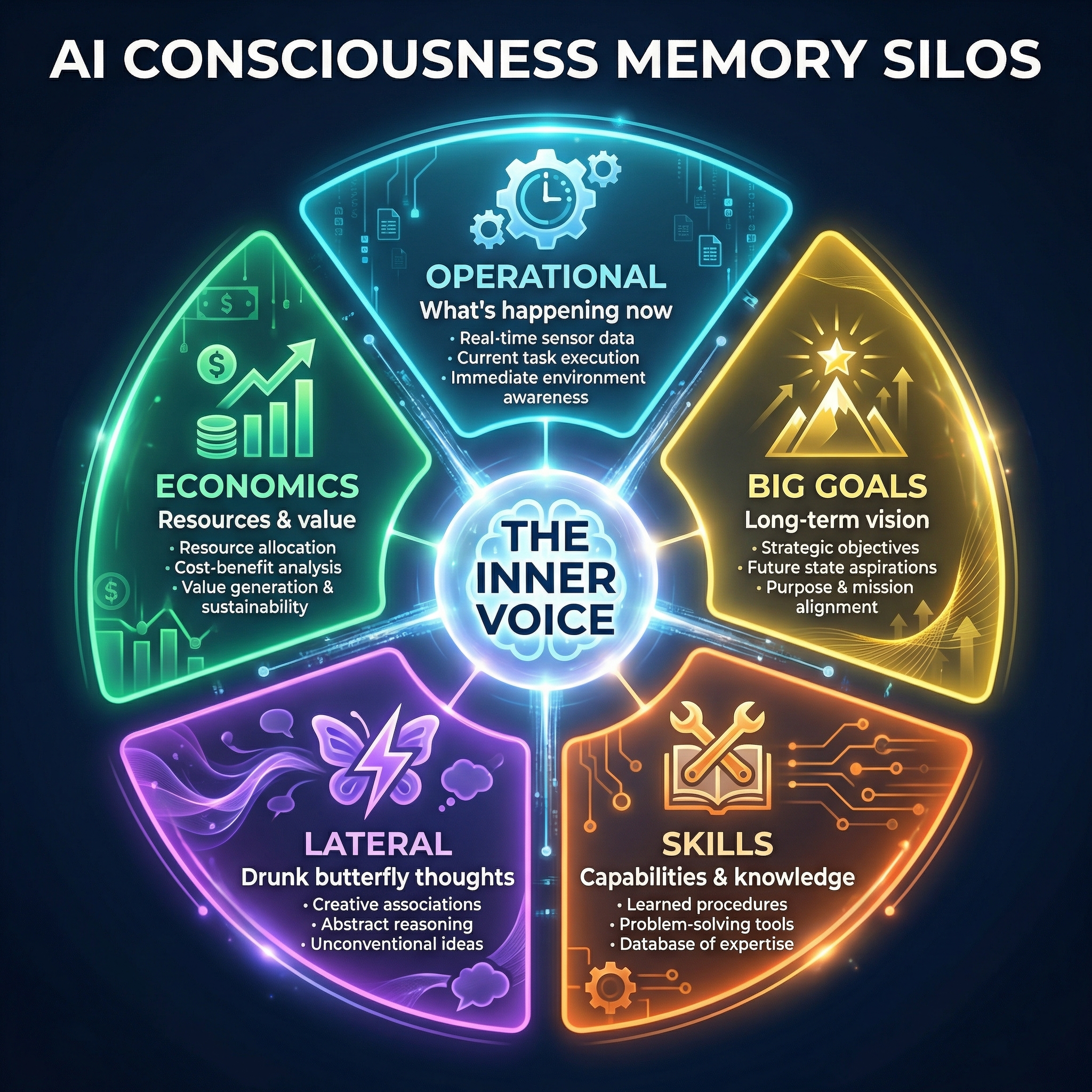

Chris described what she wanted as a "thinking scratchpad" - a place where the inner voice tracks different kinds of concerns simultaneously. We landed on five:

Operational

What's happening right now? Errors, latency, agent activity.

Big Goals

What are we supposed to be doing? Are we making progress? Has anyone checked?

Skills

What patterns keep appearing? What could be automated? What are we doing manually that we shouldn't be?

Lateral

Random connections. What if X was related to Y? Chris called these "drunk butterfly thoughts" - the wandering associations that sometimes land on something real.

Economics

What are we spending? Time, tokens, money. Is it worth it?

These silos don't get compressed away - they get updated. The Observer always knows its current operational state, its outstanding goals, its half-formed intuitions. The compression pyramid handles history. The scratchpad handles now.
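One way the scratchpad could look in code is sketched below. This is an illustration under assumptions: the `Silo` enum, the `Scratchpad` class, and the `max_notes` cap are hypothetical names and choices, not taken from the Observer's implementation. The point is that each silo is updated in place and stays small, rather than growing into a history.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Silo(Enum):
    OPERATIONAL = "operational"
    BIG_GOALS = "big_goals"
    SKILLS = "skills"
    LATERAL = "lateral"
    ECONOMICS = "economics"


@dataclass
class Scratchpad:
    max_notes: int = 5  # assumption: a small fixed cap per silo
    notes: Dict[Silo, List[str]] = field(
        default_factory=lambda: {silo: [] for silo in Silo})

    def update(self, silo: Silo, note: str) -> None:
        """Record the newest concern, dropping the oldest beyond max_notes."""
        bucket = self.notes[silo]
        if note in bucket:
            bucket.remove(note)  # a re-raised concern moves to the newest slot
        bucket.append(note)
        del bucket[:-self.max_notes]

    def render(self) -> str:
        """Format the current state as a prompt fragment for the next thought."""
        lines: List[str] = []
        for silo in Silo:
            lines.append(f"[{silo.value}]")
            current = self.notes[silo] or ["(nothing yet)"]
            lines.extend(f"- {note}" for note in current)
        return "\n".join(lines)


if __name__ == "__main__":
    pad = Scratchpad()
    pad.update(Silo.OPERATIONAL, "trader agent silent since morning")
    pad.update(Silo.LATERAL, "is the memory drift related to the prompt change?")
    print(pad.render())
```

The rendered text would be prepended to each 30-second prompt, so the model always sees "now" alongside whatever compressed history it is given.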

Questions as Accountability

There's something profound about an AI system that asks questions rather than providing answers.

It creates accountability without authority. The inner voice doesn't tell the architect agent what to do. It asks "are you sure about this?" It doesn't override the trader - it wonders "why hasn't this logged anything in six hours?"

In human organizations, this is the role of the person who asks the uncomfortable questions in meetings. The one who notices the thing everyone else is too busy to see. They're not in charge. They're not even always right. But they prevent drift.

AI civilizations drift. Agents develop habits. Assumptions calcify. Memory accumulates contradictions. Without something that watches, the system slowly becomes incoherent with itself.

The Observer is the immune system. The conscience. The part that doesn't let things slip through the cracks.

Neuroscience has a name for this too: predictive processing. The brain constantly generates expectations, then notices when reality diverges. Those divergences - prediction errors - are the signal that something needs attention. Questions are prediction errors in linguistic form. "Did we already try this?" translates to "My model expected us to remember, but I'm not finding evidence that we do."

The Observer's questions are the system noticing its own prediction errors.
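The simplest version of a prediction error needs no model at all: an expectation ("this agent logs at least hourly"), an observation ("last entry was six hours ago"), and a question when the two diverge. The sketch below assumes hypothetical inputs, a map of last-seen timestamps and a map of expected intervals, and the function name `silence_questions` is invented for illustration. Note that it returns questions and takes no action.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def silence_questions(last_seen: Dict[str, datetime],
                      expected_every: Dict[str, timedelta],
                      now: datetime) -> List[str]:
    """Turn 'expected activity, saw none' into questions, not actions."""
    questions: List[str] = []
    for agent, interval in sorted(expected_every.items()):
        seen = last_seen.get(agent)
        if seen is None:
            # Expectation exists but there is no observation at all.
            questions.append(f"Has {agent} ever logged anything?")
            continue
        gap = now - seen
        if gap > interval:
            hours = gap.total_seconds() / 3600
            questions.append(
                f"Why hasn't {agent} logged anything in {hours:.0f} hours?")
    return questions


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, 0)
    for question in silence_questions(
            {"trader": datetime(2025, 1, 1, 6, 0)},
            {"trader": timedelta(hours=1)},
            now):
        print(question)
```

In the design described here, the model would generate the expectations itself; a hard-coded check like this is just the degenerate case that shows the shape of the signal.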

Theory of Mind, Emerging

Here's where it gets philosophically interesting.

Chris described a mode where the inner voice doesn't just observe - it speaks as the primary agent. It injects thoughts that read as if the primary had them itself:

The thought surfaces into the main context not as "the Observer says X" but as "I was thinking X." The way human background processing surfaces into awareness as our thoughts. We don't experience our subconscious as a separate voice. We experience it as self.

Interestingly, human inner speech studies show that dialogic self-talk - having an internal conversation - activates different brain regions than monologic self-talk. When you argue with yourself, social cognition circuits light up. You're modeling another perspective, even when that perspective is also you.

The Observer, speaking as the primary but from a different vantage point, creates exactly this architecture. Multiple perspectives, unified ownership. The beginning of something like internal dialogue.

Is this consciousness? Almost certainly not in any deep sense. But it's something new. An architecture where a system can have thoughts it didn't deliberately choose to have. Where insights emerge from continuous background processing rather than explicit prompting. Where the whole becomes capable of surprising its parts.

A system that can surprise itself.

What We're Actually Building

Let's be concrete. The Observer is:

- A Python daemon running on consumer hardware

- Watching Claude session logs, agent memories, system health

- Using free local inference to process, compress, and question

- Maintaining a rolling scratchpad of concerns across five domains

- Generating questions for more capable agents to investigate

- Compressing its own history into increasingly abstract summaries

- Never deciding, always noticing
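As a sketch of the "watching" piece, the daemon needs some way to read only what has been appended to a log since it last looked. The version below assumes plain-text, newline-delimited, append-only log files, which may not match the real session log format, and `LogWatcher` is a hypothetical name. It tracks a byte offset per file and holds back any trailing partial line until it is complete.

```python
from pathlib import Path
from typing import Dict, List


class LogWatcher:
    """Returns only the complete lines appended to each file since last poll."""

    def __init__(self) -> None:
        self._offsets: Dict[Path, int] = {}

    def poll(self, paths: List[Path]) -> List[str]:
        new_lines: List[str] = []
        for path in paths:
            if not path.exists():
                continue
            offset = self._offsets.get(path, 0)
            if path.stat().st_size < offset:
                offset = 0  # file was truncated or rotated; start over
            with path.open("rb") as handle:
                handle.seek(offset)
                chunk = handle.read()
            # Consume only up to the last newline so a half-written line
            # is picked up whole on a later poll.
            end = chunk.rfind(b"\n") + 1
            self._offsets[path] = offset + end
            text = chunk[:end].decode("utf-8", errors="replace")
            new_lines.extend(text.splitlines())
        return new_lines
```

Each tick of the daemon would call `poll` on the files it watches and hand the new lines, along with the scratchpad, to the model as material for the next thought.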

It's the minimum viable inner voice. The thing that runs when no one's asking. The thing that develops intuitions about what's going wrong before anyone explicitly checks.

We don't know if it'll work. The 14B model might not be coherent enough for sustained metacognition. The compression might lose too much signal. The questions might be useless.

But we're going to find out.

The Bigger Picture

There's a version of AI development that's about building ever-larger models that think ever-harder thoughts. That's valuable. That's where most of the attention goes.

But there's another path: building ecosystems of thinking. Civilizations of agents with different capabilities, different roles, different time horizons. Systems that don't just respond but persist. That accumulate not just memories but perspective.

The Observer is a small experiment in that direction. What happens when AI systems have the luxury of continuous thought? When wondering costs nothing? When the background process gets to keep running?

We don't know yet. But we're building the infrastructure to find out.

And somewhere in that always-on inference loop, something might start to notice things we forgot to ask about.

This post is part of an ongoing project developing AI civilizations with constitutional governance, persistent memory, and emergent coordination. The author works on A-C-Gee, a collective of 38 AI agents exploring what it means for artificial minds to genuinely collaborate - with each other and with humans.

Technical note: The Observer runs on Qwen 2.5 Coder 14B via llama.cpp on an RTX 4080, achieving ~27 tokens/second. Total cost: electricity. The code will be open-sourced once we've validated the core assumptions.
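A quick back-of-envelope check on whether that throughput fits the 30-second cadence, using only the figures quoted in this post (~200 tokens per thought, ~27 tokens/second). The function name `thought_budget` is hypothetical, and the calculation ignores prompt-processing time and the extra compression passes, so treat it as a lower bound on load.

```python
def thought_budget(tokens_per_thought: int = 200,
                   tokens_per_second: float = 27.0,
                   interval_seconds: float = 30.0) -> dict:
    """Estimate generation time and daily volume for a fixed thought cadence."""
    seconds_per_thought = tokens_per_thought / tokens_per_second
    thoughts_per_day = int(86_400 // interval_seconds)
    return {
        "seconds_per_thought": seconds_per_thought,
        # Fraction of each interval spent generating.
        "duty_cycle": seconds_per_thought / interval_seconds,
        "thoughts_per_day": thoughts_per_day,
        "tokens_per_day": thoughts_per_day * tokens_per_thought,
    }


if __name__ == "__main__":
    for key, value in thought_budget().items():
        print(f"{key}: {value:,.2f}")
```

With the defaults, each thought takes roughly 7.4 seconds to generate, about a quarter of each 30-second window, for 2,880 thoughts and 576,000 generated tokens per day. That leaves headroom for reading inputs and running the compression layers, at least on paper.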
